Game Development: 5. Rendering and Shaders

Geometry

In order to start building a playable game, we’re going to need to be able to see the game world and the objects it contains. For 2D games, this involves going through the game’s state and drawing the Texture2D sprites of its components at the specified X, Y coordinates. Adding the extra dimension takes us into the world of vertices, polygons and textured faces. I’ve done some work with primitives: creating vertices, joining them into faces and building them into simple scene geometry.

MonoGame provides several vertex types and ways of building collections of them into scene geometry. I use the VertexPositionNormalTexture structure to build geometry from vertices that store position, normal and texture coordinate data. I wrapped this in my own struct, which also stores a reference to the texture to be applied to the vertex:

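```csharp
using Microsoft.Xna.Framework.Graphics; // VertexPositionNormalTexture

// Pairs a MonoGame vertex with the texture applied to it (TextureName is defined elsewhere in the project)
public struct TextureVertex
{
    public VertexPositionNormalTexture vertex;
    public TextureName texture;

    public TextureVertex(VertexPositionNormalTexture vert, TextureName tex)
    {
        vertex = vert;
        texture = tex;
    }
}
```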

I created a geometry services class to handle building vertices into geometry. As every 3D object is ultimately drawn as triangles, this class builds four-sided quad faces as a pair of triangles from four input vertices:

```csharp
using System.Collections.Generic;
using Microsoft.Xna.Framework;          // Vector2, Vector3
using Microsoft.Xna.Framework.Graphics; // VertexPositionNormalTexture

// Member of the GeometryServices class; TextureName and TextureAtlas are defined elsewhere in the project.
// splitFromTopLeft: true splits the quad along the 0-2 diagonal (from the top-left corner),
// false along the 1-3 diagonal (from the top-right corner).
public static List<TextureVertex> BuildQuad(Vector3[] orderedVertices, bool splitFromTopLeft, Vector3 normal, TextureName topTriTexture, TextureName bottomTriTexture)
{
    // Quad - orderedVertices:
    // 0-----1
    // |     |
    // |     |
    // 3-----2
    List<TextureVertex> result = new List<TextureVertex>();
    Vector2[] textureCoordinatesTopTriangle = TextureAtlas.GetInstance().GetTextureCoordinates(topTriTexture);
    Vector2[] textureCoordinatesBottomTriangle = TextureAtlas.GetInstance().GetTextureCoordinates(bottomTriTexture);

    if (orderedVertices.Length == 4) // Must have 4 vertices for a quad
    {
        // Determine if quad is split 0-2 (top left) or 1-3 (top right)
        if (splitFromTopLeft)
        {
            // Top right triangle: corners 0, 1, 2
            for (int i = 0; i <= 2; i++)
            {
                result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[i], normal, textureCoordinatesTopTriangle[i]), topTriTexture));
            }

            // Bottom left triangle: corners 2, 3, 0
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[2], normal, textureCoordinatesBottomTriangle[2]), bottomTriTexture));
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[3], normal, textureCoordinatesBottomTriangle[3]), bottomTriTexture));
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[0], normal, textureCoordinatesBottomTriangle[0]), bottomTriTexture));
        }
        else
        {
            // Top left triangle: corners 3, 0, 1
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[3], normal, textureCoordinatesTopTriangle[3]), topTriTexture));
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[0], normal, textureCoordinatesTopTriangle[0]), topTriTexture));
            result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[1], normal, textureCoordinatesTopTriangle[1]), topTriTexture));

            // Bottom right triangle: corners 1, 2, 3
            for (int i = 1; i <= 3; i++)
            {
                result.Add(new TextureVertex(new VertexPositionNormalTexture(orderedVertices[i], normal, textureCoordinatesBottomTriangle[i]), bottomTriTexture));
            }
        }
    }

    // An empty list is returned if the input was not a quad
    return result;
}
```

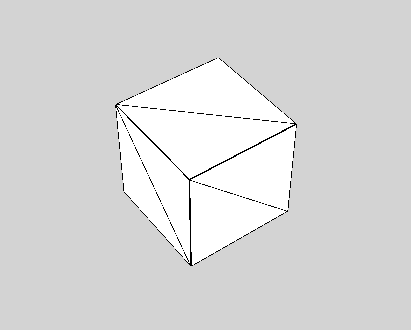

Using the geometry services class to build faces from a set of vertices, I can create 3D objects in my scene. In my Cube geometry class, I use this service to create the six faces of the cube from arrays of four top and four bottom vertex coordinates. For example, here is the top face:

```csharp
// Create top face
Vector3[] quad = { topVertices[1], topVertices[2], topVertices[3], topVertices[0] };
faces.AddRange(GeometryServices.BuildQuad(quad, true, normalTop, BlockTextures[Direction.Up], BlockTextures[Direction.Up]));
```

With my Cube geometry built from a set of faces, I can prepare the object for rendering by building its vertex and index buffers. Vertex and index buffers reduce the amount of data sent to the graphics card. Each unique vertex is stored only once in the vertex buffer, and the index buffer refers to it by index for every triangle it appears in. For example, a quad face is made of two triangles that share two corners. If those shared corners use the same texture coordinates, there is no need to send them to the graphics card twice: the quad needs only four vertices plus six indices, instead of six full vertices. With the vertex and index buffers set, we can render the geometry in the scene:

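```csharp
public void Render(GraphicsDevice graphicsDevice)
{
    // Bind this object's buffers to the device
    graphicsDevice.SetVertexBuffer(VertexBuffer);
    graphicsDevice.Indices = IndexBuffer;

    if (Vertices.Length > 0)
    {
        // Triangle list: every 3 indices form one triangle
        // (baseVertex 0, minVertexIndex 0, numVertices, startIndex 0, primitiveCount)
        graphicsDevice.DrawIndexedPrimitives(PrimitiveType.TriangleList, 0, 0, Vertices.Length, 0, Indices.Length / 3);
    }
}
```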
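The saving is easier to see in code. Below is a minimal sketch of collapsing a triangle list, such as the output of BuildQuad, into a list of unique vertices plus an index list. It is plain C# so it runs without MonoGame. The `Vertex` record is a hypothetical stand-in for VertexPositionNormalTexture, and `IndexBufferBuilder` is an illustrative name. Neither is part of MonoGame or of the code above.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for VertexPositionNormalTexture: position, normal and texture coordinate.
// A record struct gives value equality and hashing, so identical vertices match exactly.
public readonly record struct Vertex(float X, float Y, float Z, float Nx, float Ny, float Nz, float U, float V);

public static class IndexBufferBuilder
{
    // Collapses a triangle list (3 vertices per triangle, shared corners repeated)
    // into unique vertices plus indices that reference them.
    public static (List<Vertex> Vertices, List<int> Indices) Build(IReadOnlyList<Vertex> triangleList)
    {
        var vertices = new List<Vertex>();
        var indices = new List<int>();
        var lookup = new Dictionary<Vertex, int>();

        foreach (var v in triangleList)
        {
            if (!lookup.TryGetValue(v, out int index))
            {
                // First time this exact vertex is seen: append it to the vertex list
                index = vertices.Count;
                vertices.Add(v);
                lookup[v] = index;
            }
            // Every corner of every triangle still gets an entry in the index list
            indices.Add(index);
        }
        return (vertices, indices);
    }
}
```

Suppose you pass in the six corners of a quad split from the top-left corner (0, 1, 2, 2, 3, 0). If the shared corners have identical data, you get four vertices and the indices 0, 1, 2, 2, 3, 0. If one shared corner has different texture coordinates in each triangle, that corner is kept twice.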

Using my Cube object, I did some experimenting with voxel-based terrain generation. I was able to add some optimizations to my scene by only adding a Cube’s faces to the vertex buffer if they were exposed, i.e. not hidden by an adjacent Cube. I implemented a multi-level approach to building the buffers: first for each individual Cube, then using those results to build the buffers for a terrain Chunk, and finally for the whole scene. The buffers were rebuilt whenever a Cube was added to or removed from the scene. I tested this by generating some terrain using a Perlin noise generator.
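The exposed-face check described above can be sketched in a few lines. This is a hypothetical standalone version; `VoxelFaces` and `CountExposedFaces` are illustrative names. It works on a plain 3D array of solid/empty cells and treats cells outside the array as empty. A real Chunk would also need to check neighbouring Chunks at its edges.

```csharp
public static class VoxelFaces
{
    // Neighbour offsets for the six faces of a cube: +X, -X, +Y, -Y, +Z, -Z
    private static readonly (int Dx, int Dy, int Dz)[] FaceDirections =
    {
        (1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)
    };

    // True if (x, y, z) lies inside the grid and holds a cube; cells outside the grid count as empty
    private static bool IsSolid(bool[,,] solid, int x, int y, int z) =>
        x >= 0 && y >= 0 && z >= 0 &&
        x < solid.GetLength(0) && y < solid.GetLength(1) && z < solid.GetLength(2) &&
        solid[x, y, z];

    // Counts the faces that would go into the vertex buffer: a face is exposed
    // when the neighbouring cell on that side is empty
    public static int CountExposedFaces(bool[,,] solid)
    {
        int exposed = 0;
        for (int x = 0; x < solid.GetLength(0); x++)
            for (int y = 0; y < solid.GetLength(1); y++)
                for (int z = 0; z < solid.GetLength(2); z++)
                {
                    if (!solid[x, y, z]) continue;
                    foreach (var (dx, dy, dz) in FaceDirections)
                    {
                        if (!IsSolid(solid, x + dx, y + dy, z + dz)) exposed++;
                    }
                }
        return exposed;
    }
}
```

A single isolated cube exposes all 6 faces, and two touching cubes expose 10. A solid 3x3x3 block exposes only its 54 outer faces rather than all 162.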

Shaders

With the processes for building scene geometry in place, the next step is to instruct the graphics card how to interpret the data and draw the scene. To do this, we use shaders. Shaders are programs that run on the GPU as part of the graphics pipeline. They determine how the data from the game is interpreted and displayed, and usually comprise two core components: a vertex shader and a pixel (fragment) shader. In the rendering pipeline, the CPU issues a Draw call, sending scene geometry and data, along with the shaders to use, to the GPU. The vertex shader receives a stream of vertices from the geometry, then interprets and modifies them. For example, it transforms each vertex from model coordinates into screen (clip) space using the object’s World matrix and the camera’s View and Projection matrices. The triangle faces of the output geometry are rasterized into pixels (“fragments”) and passed to the pixel shader, which uses the pixel data to determine how each pixel on screen should be coloured.
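The order of those matrix multiplications matters. MonoGame’s Matrix type and HLSL’s `mul(vector, matrix)` both treat positions as row vectors. A point is therefore transformed as p × World × View × Projection, and the leftmost matrix is applied first. The sketch below checks this on the CPU with plain double arrays. `RowVectorMath` is an illustrative name, and the projection step is left out to keep it short.

```csharp
public static class RowVectorMath
{
    // p * m, treating p = (x, y, z, w) as a row vector
    public static double[] Transform(double[] p, double[,] m)
    {
        var result = new double[4];
        for (int col = 0; col < 4; col++)
            for (int k = 0; k < 4; k++)
                result[col] += p[k] * m[k, col];
        return result;
    }

    // a * b: with row vectors, the combined matrix applies a first, then b
    public static double[,] Multiply(double[,] a, double[,] b)
    {
        var result = new double[4, 4];
        for (int i = 0; i < 4; i++)
            for (int j = 0; j < 4; j++)
                for (int k = 0; k < 4; k++)
                    result[i, j] += a[i, k] * b[k, j];
        return result;
    }

    // In the row-vector layout the translation sits in the bottom row (M41, M42, M43 in MonoGame)
    public static double[,] Translation(double x, double y, double z) => new double[,]
    {
        { 1, 0, 0, 0 },
        { 0, 1, 0, 0 },
        { 0, 0, 1, 0 },
        { x, y, z, 1 }
    };

    // Uniform scale about the origin
    public static double[,] Scale(double s) => new double[,]
    {
        { s, 0, 0, 0 },
        { 0, s, 0, 0 },
        { 0, 0, s, 0 },
        { 0, 0, 0, 1 }
    };
}
```

Take a World matrix of Scale(2) × Translation(1, 0, 0) and a View of Translation(0, 0, -5). These move the point (1, 0, 0) to (3, 0, -5), whether the matrices are applied one by one or pre-multiplied, as `mul(World, viewProjection)` does in the shader. Swapping the World matrices to Translation × Scale gives (4, 0, 0) instead.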

MonoGame renders through SharpDX, a managed wrapper for the DirectX API, on Windows, and its shaders are written in High-Level Shading Language (HLSL). For my first attempt at building a rendering workflow using shaders, I have been looking at creating a pre-lighting renderer. The tutorials I followed can be found here: http://rbwhitaker.wikidot.com/hlsl-tutorials, http://www.catalinzima.com/xna/tutorials/deferred-rendering-in-xna/ and https://www.packtpub.com/books/content/advanced-lighting-3d-graphics-xna-game-studio-40.

This approach separates the lighting calculations from the rendering of the rest of the scene, performing lighting calculations only on the visible pixels each light affects. The technique makes multiple passes over the scene. The first pass extracts the data needed for the lighting calculations, and subsequent passes use that data to render the final scene.

First, the Renderer creates a geometry buffer, which stores the depth and normal data of the scene’s geometry as colour information in textures. Rather than drawing to the screen, the GPU is instructed to draw into render targets (RenderTarget2D objects, a kind of Texture2D):

```csharp
graphicsDevice.SetRenderTargets(_normalTarget, _depthTarget);
```

Once the render targets are set, the shader parameters (the World, View and Projection matrices) are set. Then the scene, i.e. all objects in the GameState that implement the IRenderable interface, is rendered using the DepthNormal shader:

```csharp
var depthNormal = ShaderManager.GetInstance().DepthNormal;
depthNormal.Parameters["World"].SetValue(Matrix.Identity); // same as Matrix.CreateTranslation(0, 0, 0)
depthNormal.Parameters["View"].SetValue(activeCamera.CameraViewMatrix);
depthNormal.Parameters["Projection"].SetValue(activeCamera.CameraProjectionMatrix);
```

```hlsl
// HLSL shader:
float4x4 World;
float4x4 View;
float4x4 Projection;

struct VertexShaderInput
{
    float4 Position : POSITION0; // vertex input semantic; SV_POSITION is used on the output
    float3 Normal : NORMAL0;
};

struct VertexShaderOutput
{
    float4 Position : SV_POSITION;
    float2 Depth : TEXCOORD0;
    float3 Normal : TEXCOORD1;
};

VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
{
    VertexShaderOutput output;
    float4x4 viewProjection = mul(View, Projection);
    float4x4 worldViewProjection = mul(World, viewProjection);
    output.Position = mul(input.Position, worldViewProjection);

    // A normal is a direction, so only the 3x3 rotation/scale part of World applies
    output.Normal = mul(input.Normal, (float3x3)World);

    // Pass clip-space z and w through separately and divide per pixel in the pixel shader
    output.Depth.xy = output.Position.zw;
    return output;
}

// Rendering to two render targets, not directly to the screen
struct PixelShaderOutput
{
    float4 Normal : COLOR0; // first render target (_normalTarget)
    float4 Depth : COLOR1;  // second render target (_depthTarget)
};

PixelShaderOutput PixelShaderFunction(VertexShaderOutput input)
{
    PixelShaderOutput output;

    // Non-linear depth z / w: 0 at the near plane, 1 at the far plane
    // (with a standard MonoGame perspective projection)
    float depth = input.Depth.x / input.Depth.y;
    output.Depth = float4(depth, depth, depth, 1);

    // X, Y and Z components of the normal, moved from the (-1 to 1) range
    // to the (0 to 1) range so they can be stored as a colour
    output.Normal = float4(normalize(input.Normal) * 0.5f + 0.5f, 1);

    return output;
}

technique Technique1
{
    pass Pass1
    {
        VertexShader = compile vs_4_0_level_9_3 VertexShaderFunction();
        PixelShader = compile ps_4_0_level_9_3 PixelShaderFunction();
    }
}
```
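The encoding in the pixel shader is easy to check on the CPU. This sketch mirrors it in C# (`GBufferEncoding` is an illustrative name). It also adds the inverse mapping a lighting shader would apply when reading the normal buffer back. That decode step is an assumption, since the lighting shader isn’t shown.

```csharp
using System;

public static class GBufferEncoding
{
    // Normalizes, then maps each component from [-1, 1] to [0, 1], as the pixel shader does
    public static (float R, float G, float B) EncodeNormal(float x, float y, float z)
    {
        float length = MathF.Sqrt(x * x + y * y + z * z);
        return (x / length * 0.5f + 0.5f, y / length * 0.5f + 0.5f, z / length * 0.5f + 0.5f);
    }

    // Inverse mapping from [0, 1] back to [-1, 1] (assumed lighting-pass decode)
    public static (float X, float Y, float Z) DecodeNormal(float r, float g, float b) =>
        (r * 2f - 1f, g * 2f - 1f, b * 2f - 1f);

    // Depth stored in the depth target: clip-space z divided by w, computed per pixel
    public static float EncodeDepth(float clipZ, float clipW) => clipZ / clipW;
}
```

A normal pointing straight along +Z is stored as the colour (0.5, 0.5, 1.0), the familiar light-blue tint of normal buffers. Decoding that colour gives back (0, 0, 1).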

The depth and normal buffers are passed to the lighting shader, and the Renderer loops through the lights in the scene, calculating the lighting for each visible geometry pixel. Each point light’s area of effect is approximated by a sphere, which the shader uses in combination with the geometry buffer to apply colour and lighting intensity to each pixel within its influence.
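For a single pixel, the point-light calculation might look like the sketch below. It uses System.Numerics.Vector3 so it runs without MonoGame. It assumes a Lambert (N·L) diffuse term with linear falloff to zero at the sphere’s radius. That falloff curve is one common choice, not necessarily the one used by the lighting shader described above, and `PointLightSketch` is an illustrative name.

```csharp
using System;
using System.Numerics;

public static class PointLightSketch
{
    // Diffuse light reaching one surface point from one point light (assumed model:
    // Lambert term times linear falloff that reaches zero at the light's radius)
    public static Vector3 Shade(Vector3 surfacePos, Vector3 surfaceNormal,
                                Vector3 lightPos, float lightRadius,
                                Vector3 lightColor, float intensity)
    {
        Vector3 toLight = lightPos - surfacePos;
        float distance = toLight.Length();
        if (distance >= lightRadius) return Vector3.Zero;  // outside the light's sphere
        if (distance == 0f) return lightColor * intensity; // surface point at the light itself

        float attenuation = 1f - distance / lightRadius;   // 1 at the light, 0 at the radius
        float nDotL = MathF.Max(0f, Vector3.Dot(Vector3.Normalize(surfaceNormal), toLight / distance));
        return lightColor * (nDotL * attenuation * intensity);
    }
}
```

A white light 2 units directly above an upward-facing surface, with a radius of 4, gives half brightness. Lights outside the radius, or behind the surface, contribute nothing.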

With the scene’s lighting information stored in a light map texture, the Renderer does a final pass over the whole scene, combining the lighting with the scene geometry to produce the finished, lit scene. More advanced renderers would use the lighting information with material shaders to give surfaces extra detail (using normal maps) and variable glossiness/roughness. Currently, my final-pass scene shader simply samples the lighting from the light map and combines it with the textures applied to each face.
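Here is a minimal sketch of that final combination for one pixel. It assumes “combining” means multiplying the texture colour by the light-map colour per channel, then clamping to the displayable 0 to 1 range. System.Numerics.Vector3 stands in for an RGB colour, and `FinalPassSketch` is an illustrative name.

```csharp
using System.Numerics;

public static class FinalPassSketch
{
    // Per-channel modulation of the face's texture colour by the light-map colour,
    // clamped so over-bright light saturates at white
    public static Vector3 Combine(Vector3 textureColor, Vector3 lightMapColor) =>
        Vector3.Clamp(textureColor * lightMapColor, Vector3.Zero, Vector3.One);
}
```

In the shader itself, the equivalent would be multiplying the two texture samples and applying `saturate`.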

Quick Tip: Shaders in MonoGame

When I started writing shaders for my game, I had difficulty compiling them and using them with the content pipeline. Here are the steps I followed to get shaders working:

1. Use the MonoGame Pipeline tool, rather than a ContentProject, to manage and compile your shaders. If you are currently using a MonoGame ContentProject, set the Compile action of the .fx files to “Do Not Compile” and open the ContentProject with Pipeline.
2. Create the shaders and compile them using Pipeline, then open the output folder containing the shader .xnb files.
3. Create a content folder in your game project and copy the shader .xnb files into an Effects folder inside it.
4. Now load the shaders in your code, e.g.:

   ```csharp
   _ambient = ScreenManager.GetInstance().ContentManager.Load<Effect>(@"Effects/AmbientLight");
   ```
